Archive

Statement Code Coverage Testing – Part 2

Back in November 2009 I posted “UniBasic Code Coverage” as an open-source project. At the time it was a stripped-down version of one I had set up for my then employer. The employer’s version used an in-house pre-processor that greatly simplified the work needed to make it work with our source files.

I have now released the v0.2 (update: v0.8) development version, which fixes several bugs, adds the ability to specify a custom pre-processor for those who don’t use straight UniBasic, and improves the documentation on installing, using and contributing.

As you may already be aware, the source code is hosted in a Subversion repository on the UniBasic Code Coverage Project at SourceForge. If you have Subversion installed, you can check out the code with the following command:

svn co https://ucov.svn.sourceforge.net/svnroot/ucov ucov

If you are running UniData or UniVerse on Windows, I highly recommend you install TortoiseSVN, as it greatly simplifies working with Subversion.

On the SourceForge site you will find not only the Subversion repository for all the code, but also the ‘Tracker’, which allows you to submit Feature and Bug tickets. If you need help with anything, you can submit a Support Request as well.

If you wish to contribute to the code or documentation, you can introduce yourself on the Developer Forum. The best way to submit code or documentation is to generate a diff of the changes and include a description of what the behaviour was before the change and what it is after the change.

When you have used UBC, be sure to fill out a Review. All constructive input is welcome and appreciated!

Making Life Easier

Every developer worth their salt has little snippets of code that they use to make their life easier.

So today, I thought I’d share a little utility that I use all the time.

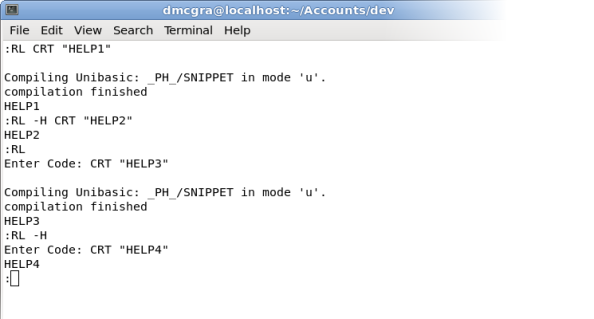

Readers, meet RL. RL, meet readers. RL (Run Line) allows you to run a single line of code to see what it does. Typically, I use it as a quick way to check an OCONV expression I haven’t used in a while, or as a calculator replacement when I don’t feel like tabbing out of the terminal. All you need to do is compile and catalog it to enjoy its simplicity. RL supports one option, ‘-H’, which hides the compiler output.

To use RL, you can either enter the code as part of the command line arguments, or you can enter it as an input. Here is a screenshot of RL in action:

RL Utility
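In text form, the two styles of invocation look roughly like the sketch below. It assumes the program has been cataloged under the name RL and that the command line reaches the program unchanged in @SENTENCE; the expressions themselves are only illustrations.

```
RL CRT OCONV(12345, "MD2")
RL -H CRT 17 * 3
RL
```

The first line compiles and runs the snippet with the compiler output visible and should print 123.45, since MD2 scales the value by two decimal places. The second hides the compiler output and prints 51. The third, with no code on the command line, prompts with “Enter Code:” and reads the line from input.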

Disclaimer: I strongly recommend against implementing this on a production machine, as it allows arbitrary code execution. This code has only been tested on UniData 7.2.x. Feel free to use this code however you want. If you somehow turn this into something profitable, share the love and either buy me a beer or make a donation to an open source project.

Okay, so enough with the spiel. Here’s the code:

(Updated 2011-06-02 due to ‘<-1>’ being stripped)

EQU TempDir TO "_PH_"

EQU TempProg TO "SNIPPET"

OPEN TempDir TO FH.TempDir ELSE STOP "ERROR: Unable to open {":TempDir:"}"

* Determine if we should hide compiler output

* Also determine the start of any command line 'code'

IF FIELD(@SENTENCE, " ", 2) = "-H" THEN

HideFlag = @TRUE

CodeStart = COL2() + 1

END ELSE

CodeStart = COL1()

IF CodeStart = 0 THEN

CodeStart = LEN(@SENTENCE) + 1 ;* Force it to the end

END ELSE

CodeStart += 1 ;* Skip the Space

END

HideFlag = @FALSE

END

* Get the code from the command line arguments, or

* Get the code from stdin

IF CodeStart <= LEN(@SENTENCE) THEN

Code = @SENTENCE[CodeStart, LEN(@SENTENCE) - CodeStart + 1]

Code = TRIM(Code, " ", "B")

END ELSE

PROMPT ''

CRT "Enter Code: ":

INPUT Code

END

* Compile, catalog and run the program

* We only catalog it so that @SENTENCE behaves as you would expect

WRITEU Code TO FH.TempDir, TempProg ON ERROR STOP "ERROR: Unable to write {":TempProg:"}"

Statement = "BASIC ":TempDir:" ":TempProg

Statement<-1> = "CATALOG ":TempDir:" ":TempProg:" FORCE"

IF HideFlag THEN

EXECUTE Statement CAPTURING Output RETURNING Errors

END ELSE

EXECUTE Statement

END

EXECUTE TempProg

* Clean up time

DecatStatement = "DELETE.CATALOG ":TempProg

EXECUTE DecatStatement CAPTURING Output RETURNING Errors

DELETE FH.TempDir, "_":TempProg ;* Remove the compiled object code

DELETE FH.TempDir, TempProg

STOP
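The option parsing in RL relies on a side effect of FIELD: after each call, COL1() and COL2() report the positions of the delimiters on either side of the extracted field. A minimal sketch of that behaviour, using a hypothetical hard-coded sentence in place of @SENTENCE:

```
* Hypothetical stand-in for @SENTENCE
Sentence = "RL -H CRT 1"

Word = FIELD(Sentence, " ", 2)  ;* Extracts "-H"
P1 = COL1()                     ;* 3, the space before field 2
P2 = COL2()                     ;* 6, the space after field 2

* Everything after the second space is the code to run
Code = Sentence[P2 + 1, LEN(Sentence) - P2]

CRT Word  ;* -H
CRT P1    ;* 3
CRT P2    ;* 6
CRT Code  ;* CRT 1
```

This is why RL starts the code at COL2() + 1 when ‘-H’ is present and at COL1() + 1 otherwise: in the first case the code begins after the option, in the second it begins at the second word itself.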