
Data Integrity

One of the features not present in UniData that you may have become used to in the world of SQL is referential integrity.

Data is one of the most valuable assets of a company. If only for this reason alone, it should be treated with the utmost respect and professional care. Everybody knows that backing up data is essential, but what data are you backing up?

If the data is already corrupt you’re in a whole world of hurt. How long has it been corrupt? Has it corrupted other data? Has it already impacted the business and to what extent? You can’t just restore from several months ago. You have to spend the time manually working out what went wrong and how to fix it, and potentially trawling through backups to find data to reinstate.

Here I should clarify exactly what I’m referring to by ‘corrupt data’. I’m not talking about OS-level corruption; from here on I will be talking about 2 types of logical corruption:

- Breaking Referential integrity

- Breaking Domain integrity

Unlike the major databases (such as MSSQL, Oracle and MySQL) UniData and UniVerse do not have logical data integrity constraints supported in the database layer. This leaves it up to each individual application to ensure data integrity.

Anyone working with databases knows that bugs (both current and of old) can result in logical inconsistencies creeping into your data. The more denormalised your data, the higher the chance for this corruption.

Some of this corruption will become apparent immediately because a process will fail and require you to locate and fix both the cause of the corruption and the corruption itself. Surprisingly, these are not the cases you should be most worried about. The worst are the ones you don’t notice, because they don’t cause the system to visibly malfunction. They can fester in your system for years, silently corrupting data that is derived from them and potentially impacting business decisions. Over time the data itself becomes much harder to repair, since the information you need may no longer be readily at hand. If/when these eventually cause a problem, it will be much harder and more time-consuming to address, if it is even possible.

Since we have to handle logical data integrity entirely in the application layer, U2 databases are somewhat more susceptible to these issues from code bugs. To combat this, there are 2 methods I propose you adopt.

The first is a Data Integrity Audit (DIA) you can schedule regularly in production. This validates your data and reports on any inconsistencies it encounters. This helps you identify issues earlier and potentially helps track down the programs/conditions that are causing the corruption. We have already implemented this system for ourselves and I’ll explain how we did it below.

The second method is based on the above DIA. By modifying it to run from file triggers, you can implement a system to use while testing (Unit, System and User Acceptance Testing) that can report exactly what program/line is writing the corrupted record as it happens. Catch it BEFORE it reaches production! However, I don’t recommend actually implementing this in production (at least, not without great care and load testing) since it will have performance implications that may be unacceptable.

Implementing a solution

Alright, enough of the prelude. Let’s talk about implementing a DIA program into your system. It isn’t as hard as you might think and it can be set up incrementally so you can cover your most important data first.

The system has 4 parts to set up:

- Defining the Rules

- Storing the Rules

- Checking the Data

- Reporting on Violations

Defining the Rules

The first step is to define the logical rules that should be constraining your data. The rules will fall into 2 categories:

- Referential integrity: Identify any attributes that are foreign keys (or lists of foreign keys)

- Domain integrity: Specify the ‘domain’ of the field. This includes type (alpha, numeric, etc), enumerations, length, and if NULL is allowable.

Looking at a few of your key tables, you should be able to quickly identify some basic rules your data naturally should abide by. Write these down as these will be some easy rules to start testing.

Storing the Rules

The second step is determining how to store the rules. Although you can do this however you want, there are several reasons that make using the dictionary file ideal:

- Placing the constraints in with the schema (both are structural metadata). Collocation is a good thing.

- Attribute 1 can store anything after the type; it allows you to store the constraint directly with the section of the schema you are constraining!

- X-Type Attributes allow you to use enumerations (part of domain integrity) while still keeping them defined in the schema, instead of elsewhere.

- It allows you to easily test and/or enforce the constraints with the ‘CALCULATE’ function (more on this later)

So, how exactly do you store the constraints in with the dictionary records? Here is the scheme we use:

TYPE [FKEY filename [PING f,v,s]] [MAND] [ENUM enum_item]

- FKEY: Foreign key to ‘filename‘

- PING: Checks for @ID in the foreign record location <f,v,s>

- MAND: Value cannot be NULL

- ENUM: Value must be an enumeration in the dictionary X-type record ‘enum_item‘

When attribute 6 of the dictionary item indicates that the data is a multivalued list, FKEY, MAND, ENUM and DATATYPE should adhere to it and treat each item in the list separately. The only special case is MAND, which only causes a violation when a multivalue in the list is empty. That means it does not cause a violation when there is no list at all. If you want to cover this case, you can create another, non-multivalued dictionary item for the same attribute and apply the MAND rule to it.
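To make the scheme concrete, here is a minimal sketch of how attribute 1 of a dictionary item could be parsed into its constraints. It is written in Python purely for illustration, not in UniBasic, and it is not our actual DIA code. The names `Constraint` and `parse_constraint` are hypothetical, and treating any unrecognised word as descriptive text is an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Constraint:
    """Parsed form of: TYPE [FKEY filename [PING f,v,s]] [MAND] [ENUM enum_item]"""
    dict_type: str
    fkey: Optional[str] = None
    ping: Optional[Tuple[int, int, int]] = None
    mand: bool = False
    enum: Optional[str] = None


def _argument(tokens: List[str], i: int, keyword: str) -> str:
    """Return the word following a keyword, or fail if it is missing."""
    if i + 1 >= len(tokens):
        raise ValueError(f"{keyword} needs an argument")
    return tokens[i + 1]


def parse_constraint(attr1: str) -> Constraint:
    """Parse attribute 1 of a dictionary item into a Constraint."""
    tokens = attr1.split()
    if not tokens:
        raise ValueError("attribute 1 is empty")
    result = Constraint(dict_type=tokens[0])
    i = 1
    while i < len(tokens):
        word = tokens[i]
        if word == "FKEY":
            result.fkey = _argument(tokens, i, word)
            i += 2
        elif word == "PING":
            if result.fkey is None:
                raise ValueError("PING is only valid after FKEY")
            f, v, s = (int(n) for n in _argument(tokens, i, word).split(","))
            result.ping = (f, v, s)
            i += 2
        elif word == "MAND":
            result.mand = True
            i += 1
        elif word == "ENUM":
            result.enum = _argument(tokens, i, word)
            i += 2
        else:
            # Assumption: any other word is free-form descriptive text
            i += 1
    return result
```

For example, `parse_constraint("D FKEY CUSTOMERS MAND")` describes a D-type item that is a mandatory foreign key into a (hypothetical) CUSTOMERS file.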

Checking the Data

The third part is how you will test/enforce these constraints:

- Production: A program that, given a filename, reads in the dictionary items and associated constraints. It can then test each record and report any violations. This would typically be run as part of a nightly job and/or, if you are set up for it, on a backup/restore of production onto a development machine.

- Development: An update trigger subroutine that is only implemented on development. This allows you to transparently test whether new or modified code is corrupting your data before it even makes it into production. Although this would typically not be implemented in your actual production system due to performance impacts, there is no technical reason that it cannot be done if so desired (even just for selected files).

These methods are not mutually exclusive and are designed to cover different situations. The first is a post corruption check that allows you to identify issues faster than you normally would. The second allows you to provide better test coverage and reduce the risk of introducing faulty code into your production system.
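The core of either method is the same per-record check. The sketch below shows one way it could look, again in Python for illustration only. Files are modelled as in-memory dictionaries, `Rule` and `check_record` are hypothetical names, PING is left out for brevity, and skipping FKEY/ENUM checks for empty values is an assumption.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional, Tuple

VM = chr(253)  # value mark separating multivalues within an attribute


@dataclass(frozen=True)
class Rule:
    name: str                              # dictionary item name
    position: int                          # 1-based attribute number
    multivalued: bool = False
    fkey: Optional[str] = None             # name of the foreign file
    mand: bool = False
    enum: Optional[FrozenSet[str]] = None  # allowed values


def check_record(
    key: str,
    record: List[str],
    rules: List[Rule],
    files: Dict[str, Dict[str, List[str]]],
) -> List[Tuple[str, str, str, str]]:
    """Return (key, dictionary item, rule name, value) for each violation."""
    violations = []
    for rule in rules:
        raw = record[rule.position - 1] if rule.position <= len(record) else ""
        if rule.multivalued:
            # No list at all yields no values, so MAND is not triggered
            values = raw.split(VM) if raw != "" else []
        else:
            values = [raw]
        for value in values:
            if value == "":
                if rule.mand:
                    violations.append((key, rule.name, "MAND", value))
                continue  # Assumption: empty values skip FKEY and ENUM
            if rule.fkey is not None and value not in files.get(rule.fkey, {}):
                violations.append((key, rule.name, "FKEY", value))
            if rule.enum is not None and value not in rule.enum:
                violations.append((key, rule.name, "ENUM", value))
    return violations
```

A nightly audit would call something like this for every record in a file, while a trigger would call it for the single record being written.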

Reporting on Violations

The fourth and final part of the system is how you report it.

There are many options you may want to consider, depending on your needs and which of the 2 options above you are considering it for.

We decided upon a non-obtrusive option that allowed us to build either reports or select lists from the results. This method requires you to create a new file to store the results. For the sake of this article, let us call it DIA_RESULTS. You can clear this file just before running the DIA program, or performing tests if you are using the trigger method.

In DIA_RESULTS, each record should contain the following information:

- Date failed

- Time failed

- Filename the violation was on

- Key the violation was on

- Dictionary item used when the violation occurred

- Rule name the violation occurred on

- The value that caused the violation (just in case it changes before you get to it)

- If from a trigger, the current call stack

Using this information it is easy to print off reports, create select lists to get to the records and to determine exactly what was wrong in the data.
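As an illustration of the record layout listed above, here is a small Python sketch of one DIA_RESULTS entry, plus a helper that groups the violating keys per file (the raw material for a select list). The names `Violation` and `keys_by_file` are hypothetical, and the real file would of course be a UniData file rather than Python objects.

```python
from dataclasses import dataclass
from datetime import date, time
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class Violation:
    date_failed: date
    time_failed: time
    filename: str        # file the violation was on
    key: str             # key the violation was on
    dict_item: str       # dictionary item in use when the violation occurred
    rule: str            # rule name, e.g. FKEY, MAND or ENUM
    value: str           # offending value, captured in case it changes later
    call_stack: Optional[str] = None  # only populated by the trigger method


def keys_by_file(results: Iterable[Violation]) -> Dict[str, List[str]]:
    """Group violating keys per file, de-duplicated, in first-seen order."""
    grouped: Dict[str, List[str]] = {}
    for violation in results:
        keys = grouped.setdefault(violation.filename, [])
        if violation.key not in keys:
            keys.append(violation.key)
    return grouped
```

Storing the offending value with the violation means the report remains useful even if the record has been changed by the time someone investigates.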

Optimising the set up of your UniData data [Part 1]

One of the benefits of U2 data servers is that it can be extremely quick to turn around a new system. The unfortunate downside is that this makes it extremely easy to ignore the architecture of your system. This can lead to future performance issues and programs that are harder to maintain.

Here I’ll be looking at the set up of your files and records (tables and columns for those still grasping UniData/UniVerse). Your system revolves around your data, so if you don’t get it right to start with it inevitably leads to a sub-optimal system. What I won’t be discussing here is the usual modulo/block-size related maintenance of your files; there is already literature in the manuals for this topic.

To start with, you should have already read my previous post about correctly setting up the layout of your files and the need to create all the relevant D-type dictionary items. With that in mind, I have a story for you…

This story is about Johnny and Alicia, who are both admin staff working for a sales company back in the 1930s. Both have a large set of contracts that they store in folders in a filing cabinet.

Occasionally their managers will ask them to find a contract that is being handled by a certain sales rep. Although they hate this task, each time they manually search through the stack of contracts to retrieve it. Funnily enough, in the time it takes Johnny to find one, Alicia can usually find at least two.

Curiosity gets the better of Johnny, who eventually asks Alicia how she is so fast.

“It’s easy, I have moved the page with the sales rep’s name to the front of the contract.”

Dang! So simple! Johnny realised having to dig ten pages deep on each contract was so senseless!

Fortunately, admin staff can now use digital retrieval systems, so they don’t have to think about this sort of small detail any more. The need to pay attention to this detail hasn’t gone away though. Now it rests with us.

Not only should you ensure the layout of data is in the correct format, but you should also pay attention to the order of your data. It should be organised with the most frequently searched and utilised data earlier in the record. Since the record fields are separated by delimiters, using and querying later attributes requires the engine to scan every character up to the requested attribute to determine where it starts. By moving the most frequently used data to the beginning of a record, you reduce the amount of work required to initially find the data.

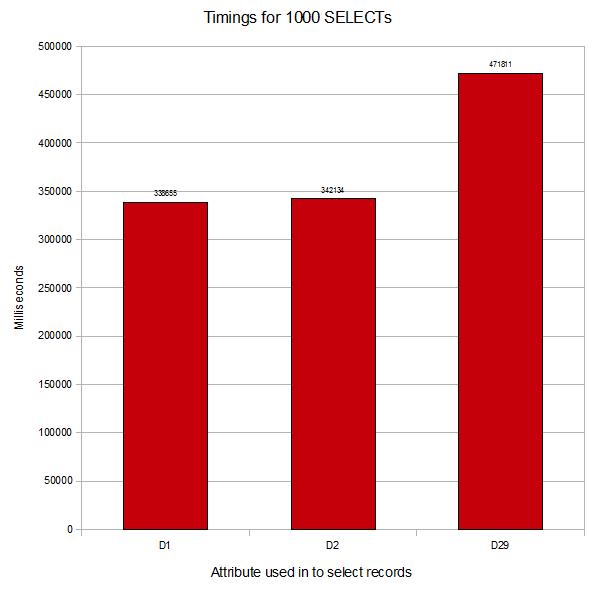
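The effect of delimiter scanning can be shown with a simplified model. The Python sketch below is not how the UniData engine is implemented; it only illustrates that locating attribute n in a delimited string means passing over everything before it. The function name `extract_attribute` is hypothetical.

```python
from typing import Tuple

AM = chr(254)  # attribute mark separating fields in a record


def extract_attribute(record: str, n: int) -> Tuple[str, int]:
    """Return (attribute n, offset at which it starts); n is 1-based.

    The offset equals the number of characters that had to be passed
    over before the attribute could be read. A missing attribute gives
    an empty string and the full record length.
    """
    start = 0
    for _ in range(n - 1):
        mark = record.find(AM, start)
        if mark == -1:
            return "", len(record)
        start = mark + 1
    end = record.find(AM, start)
    if end == -1:
        end = len(record)
    return record[start:end], start


# A record with 29 attributes, each holding the same five-character key
record = AM.join(["12345"] * 29)
first = extract_attribute(record, 1)
last = extract_attribute(record, 29)
```

In this model the first attribute is found at offset 0, while the 29th sits behind 28 earlier attributes and their delimiters.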

Here are some timings from a simple test run I performed on our system.

The setup: A file with modulo 10007, pre-filled with records keyed from 10000 to 99999. Attributes 1, 2, … up until 29 are each set to the key. I have created a D-type attribute for each one timed (D1, D2 & D29).

The test: Perform a select on the file with the attribute equal to a value (e.g. SELECT TIMINGS WITH D1="12345"). Repeat this 1000 times for each attribute tested.

Results:

Data in <1>: 338655 (100.00%)

Data in <2>: 342134 (101.03%)

…

Data in <29>: 471811 (139.32%)
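The percentages are each timing relative to the attribute 1 baseline. A short Python sketch of that arithmetic, using the figures above as reported (the helper name `relative_timings` is hypothetical):

```python
from typing import Dict


def relative_timings(timings: Dict[int, int], baseline_position: int = 1) -> Dict[int, float]:
    """Express each timing as a percentage of the baseline attribute's timing."""
    baseline = timings[baseline_position]
    return {
        position: round(100 * elapsed / baseline, 2)
        for position, elapsed in timings.items()
    }


# Attribute position -> total elapsed figure from the test run above
measured = {1: 338655, 2: 342134, 29: 471811}
relative = relative_timings(measured)
```

On these figures, selecting on attribute 29 took roughly 39% longer than selecting on attribute 1.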

Even with these small records, you can see the difference you can achieve by having your data in the correct order. Scale this up to larger files with bigger records, more complex select statements combined with the processing of these records in your subroutine and it can provide a significant difference in the execution times across a system.